The Rise of IoT...

Of all the many and varied changes in technology that have emerged over the past decade, the Internet of Things (IoT) is potentially one having biggest impact on society. The IoT devices shall rise to a whopping 75.44 billion by 2025. That's roughly 10 IoT devices per living human on planet ! Not only are these devices all connected to the internet, but many of them will also be talking to each other. The sheer volume of data that will come from all these billions of devices is one factor that needs addressing for organizations hoping to make sense of it, but also the actual relevance of that data.

For consumers this offers a number of benefits - ever more personalized products, a deeper insight into health and fitness, greater convenience and ultimately a much better user experience. For businesses, it means yet more data - much more data, much much more data, estimated to be around 175 ZB by 2025.

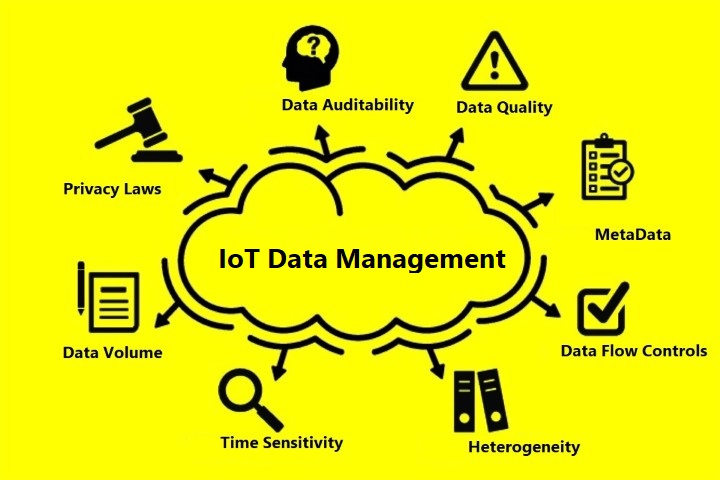

Managing all of this IoT data is set to be a major task for many businesses and the rise and growth of IoT data comes with a number of associated challenges. Many organizations will lack the architectures, policies and technologies that address the full data life cycle. Current approaches and infrastructures will need to be overhauled and / or scaled in order to get the most from IoT data.

There is also the question of immediacy with IoT. Data is generated so quickly and has such a short shelf-life, storage becomes a problem. IoT is dependent on fast data and immediate insight, and connecting a wide range of devices can make real-time processing and analysis that much harder.

With GDPR and similar data privacy laws from many leading countries; organizations need to demonstrate that they take appropriate care with every single piece of data coming into the business pipeline. Non-compliance penalties are severe. Unfortunately, the solutions to manage and utilize the massive volume of data produced by these things are yet to mature.

The vision that the IoT should strive to achieve is to provide a standard platform for developing cooperative services and applications that harness the collective power of resources available through the individual Things and any subsystems designed to manage the aforementioned Things. A comprehensive management framework of data that is generated and stored by the objects within IoT is thus needed to achieve this goal.

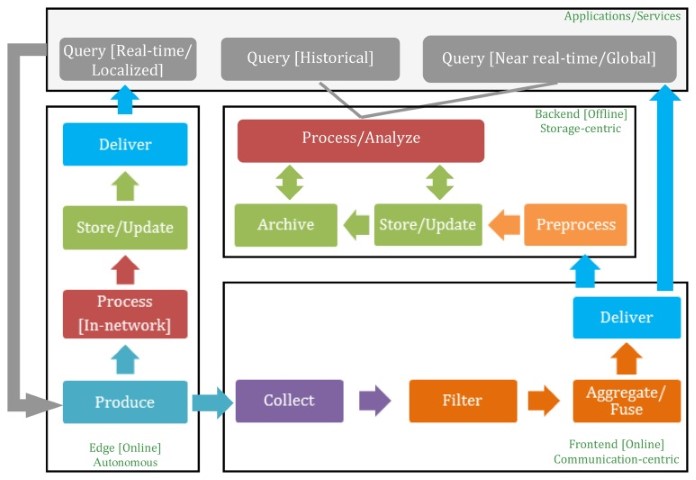

In the context of IoT, data management should act as a layer between the objects and devices generating the data and the applications accessing the data for analysis purposes and services.

IoT data has distinctive characteristics that make traditional relational-based database management an obsolete solution. A massive volume of heterogeneous, streaming and geographically-dispersed real-time data will be created by billions of diverse devices periodically sending observations about certain monitored phenomena or reporting the occurrence of certain or abnormal events of interest. Communication, storage and process will be defining factors in the design of data management solutions for IoT.

5 must-have capabilities for IoT data management

Managing data from IoT devices is an important aspect of a real-time analytics journey. To be sure your data management solution can handle IoT data demands, look for these five key capabilities:

1. Versatile connectivity and ability to handle data variety: IoT systems have a variety of standards and IoT data adheres to a wide range of protocols (MQTT, OPC, AMQP, and so on). Also, most IoT data exists in semi-structured or unstructured formats. Therefore, your data management system must be able to connect to all of those systems and adhere to the various protocols so you can ingest data from those systems. It is equally important that the solution support both structured and unstructured data.

2.Edge processing and enrichments: A good data management solution will be able to filter out erroneous records coming from the IoT systems such as negative temperature readings - before ingesting it into the data lake. It should also be able to enrich the data with metadata (such as timestamp or static text) to support better analytics.

3.Big data processing and machine learning: Because IoT data comes in very large volumes, performing real-time analytics requires the ability to run enrichments and ingestion in sub-second latency so that the data is ready to be consumed in real time. Also, many customers want to operationalize ML models such as anomaly detection in real time so that they can take preventive steps before it is too late.

4.Address data drift: Data coming from IoT systems can change over time due to events such as firmware upgrades. This is called data drift or schema drift. It is important that your data management solution can automatically address data drift without interrupting the data management process.

5.Real-time monitoring and alerting: IoT data ingestion and processing never stops. Therefore, your data management solution should provide real-time monitoring with flow visualizations to show the status of the process at any time with respect to performance and throughput. The data management solution should also provide alerts in case any issues arise during the process.